
SVP Innovation

Introduction

Artificial intelligence (AI) agents are rapidly moving beyond chatbots and simple automation, promising to revolutionize enterprise workflows. From handling customer interactions to managing complex backend operations, their potential is immense. However, as enterprises look to deploy and scale these agents, a critical question arises: How do we effectively measure and compare their capabilities in a way that reflects the unique complexities of the enterprise environment?

Generic benchmarks, often focused on conversational ability or general knowledge, fall short. Enterprise tasks are shaped by security protocols, legacy systems, compliance requirements, and varying levels of operational criticality. To bring structure to this complexity, we propose viewing enterprise tasks through a layered model that reflects the increasing depth and criticality of operations, from the user interface down to core infrastructure. The benchmarking framework built on that model aims to provide clarity and rigor in evaluating AI agents, enabling more informed decisions about development, deployment, and strategic investment.

The Challenge: Why Enterprise AI Benchmarking Needs Structure

Evaluating AI agents in an enterprise isn't just about task completion accuracy. It involves understanding performance across a spectrum of operational realities:

Without a framework that acknowledges these different operational contexts, benchmarking risks being superficial, potentially leading to misjudgments about an agent's true enterprise readiness.

Research Context: The Need for a Structured Enterprise-Focused Benchmark

AI agent benchmarking is a vibrant field, with numerous benchmarks (like AgentBench, GAIA, WebArena, ToolBench) effectively evaluating foundational capabilities like web navigation, tool/API usage, and general reasoning – tasks largely corresponding to user-facing interactions and simple application integrations.

However, a significant gap exists for the full breadth of enterprise operations. While separate methods evaluate traditional automation (like RPA), there is a lack of standardized frameworks to holistically benchmark AI agents across the entire spectrum of enterprise complexity, especially as they tackle complex workflows, sensitive backend operations, and critical infrastructure management.

This is where our proposed framework offers a distinct contribution:

Therefore, this layered framework addresses the critical need for a more comprehensive, enterprise-grounded approach to understanding and comparing AI agent capabilities.

A Layered Model for Enterprise Tasks

To systematically evaluate agents, we conceptualize enterprise tasks across five distinct operational layers.

Why five layers? This structure is a pragmatic model designed to capture distinct, qualitative shifts in task complexity, integration methods, access control, and operational risk commonly found within enterprise environments. While finer granularity is possible, these five levels represent significant boundaries – from user-facing interactions, through API-based integrations and complex workflow orchestration, to privileged backend data operations and strategic infrastructure management. This provides sufficient detail to differentiate agent capabilities across key enterprise contexts without becoming overly complex for a benchmarking framework.

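Based on this rationale, we define five layers: UI-Level Tasks (Layer 1), Application Integration Tasks (Layer 2), Middleware-Level Orchestration Tasks (Layer 3), Data Operations & Backend Automation Tasks (Layer 4), and Strategic & Infrastructure Automation Tasks (Layer 5).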

Layer vs. Dimension Matrix: An Overview

The following matrix provides a high-level overview of how six key dimensions typically shift across these layers:

This matrix highlights the increasing demands regarding access, complexity, reliability, robustness, and maintainability as agents operate deeper within the enterprise.

Detailed Layer Descriptions with Examples

Let us explore each layer with three representative task examples, phrased as user requests:

Layer 1: UI-Level Tasks

Layer 2: Application Integration Tasks

Layer 3: Middleware-Level Orchestration Tasks

Layer 4: Data Operations & Backend Automation Tasks

Layer 5: Strategic & Infrastructure Automation Tasks

This layering provides a clear progression, acknowledging that success with simpler tasks doesn't automatically translate to competence with more complex, critical ones.
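To make that progression concrete, here is a minimal Python sketch of how per-layer results might be rolled up into a single "deepest layer cleared" figure. The layer names come from the headings above; the function `highest_cleared_layer`, the threshold values, and the example pass rates are all hypothetical and chosen only for illustration.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The five operational layers named in this article."""
    UI = 1
    APPLICATION_INTEGRATION = 2
    MIDDLEWARE_ORCHESTRATION = 3
    DATA_OPERATIONS = 4
    STRATEGIC_INFRASTRUCTURE = 5


def highest_cleared_layer(pass_rates, thresholds):
    """Return the deepest layer cleared without skipping any shallower layer.

    pass_rates: dict mapping Layer -> fraction of benchmark tasks passed (0..1).
    thresholds: dict mapping Layer -> minimum acceptable pass rate.
    A layer missing from pass_rates counts as not cleared.
    Returns None if Layer 1 itself is not cleared.
    """
    cleared = None
    for layer in Layer:  # iterates in definition order, Layer 1 to Layer 5
        if pass_rates.get(layer, 0.0) < thresholds[layer]:
            break
        cleared = layer
    return cleared


# Hypothetical thresholds, stricter at deeper layers (illustrative only).
THRESHOLDS = {
    Layer.UI: 0.80,
    Layer.APPLICATION_INTEGRATION: 0.85,
    Layer.MIDDLEWARE_ORCHESTRATION: 0.90,
    Layer.DATA_OPERATIONS: 0.95,
    Layer.STRATEGIC_INFRASTRUCTURE: 0.99,
}

# Made-up results for an imaginary agent, not measured data.
example_rates = {
    Layer.UI: 0.92,
    Layer.APPLICATION_INTEGRATION: 0.88,
    Layer.MIDDLEWARE_ORCHESTRATION: 0.71,
    Layer.DATA_OPERATIONS: 0.97,
    Layer.STRATEGIC_INFRASTRUCTURE: 0.40,
}

result = highest_cleared_layer(example_rates, THRESHOLDS)
print(result.name if result is not None else "no layer cleared")
```

In this made-up example the agent scores well on Layer 4 tasks in isolation, yet it is reported as cleared only through Layer 2 because it misses the assumed Layer 3 bar. Whether a strict "no skipping" rule is the right aggregation is a design choice; a team could equally report the full per-layer profile.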

Other Dimensions for Comprehensive Benchmarking

While the matrix highlights six key dimensions, a truly comprehensive evaluation should also consider other factors like Data Sensitivity, Autonomy & Decision-Making, Observability & Transparency, Scalability & Resource Efficiency, and Integration Complexity. Contextually relevant factors such as Security Posture, Compliance & Auditability, and Ethical Considerations may also need explicit assessment.

Understanding the "Barrier to Entry"

A critical insight from this layered model is the concept of the "Barrier to Entry". This refers not to a single obstacle but to an escalating set of fundamental enterprise requirements and technical challenges that AI agents encounter as they attempt to operate beyond superficial user interactions, particularly in Layers 3 through 5. These barriers intensify significantly with system depth and data sensitivity. For instance, Security Constraints become far more critical at depth; the access required for backend data operations (Layer 4) or infrastructure control (Layer 5) demands much more rigorous authentication, authorization, vulnerability management, and threat modeling than basic UI tasks (Layer 1). Similarly, Compliance and Governance mandates (like SOX, HIPAA, GDPR) often apply most stringently to the core data processing and systems typical of deeper layers, requiring agents not just to perform tasks but to do so with verifiable, auditable proof of adherence – a significant challenge for potentially non-deterministic AI.

Furthermore, the demand for Outcome Determinism escalates sharply; while variability might be acceptable in drafting an email (Layer 1), core financial reporting (Layer 4) or infrastructure changes (Layer 5) often require absolute predictability and consistency. Integration Complexity typically increases when interacting with legacy systems, complex proprietary APIs, or direct database connections common in Layers 3-5, compared to more standardized web or SaaS APIs. Finally, expectations for Robustness and Error Handling become extremely high; a failure during a database update (Layer 4) or a cloud configuration change (Layer 5) has a vastly larger potential impact (the "blast radius") than a failed web search, necessitating sophisticated mechanisms like transactional integrity, fault tolerance, and even autonomous recovery, far beyond simple retries. Recognizing how these barriers compound and intensify across layers is essential for realistically assessing an agent's true enterprise readiness and for strategically investing in the capabilities needed to operate reliably and securely at deeper levels of the organization.
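One way to put a number on Outcome Determinism is to run the same task several times and measure how often the agent produces its most common output. The sketch below is only an illustration of that idea: the function names, the per-layer required rates, and the sample outputs are hypothetical, and exact string matching is an assumption that would likely be too strict for free-form text.

```python
from collections import Counter


def consistency_rate(outputs):
    """Fraction of runs that produced the single most common output."""
    if not outputs:
        raise ValueError("need at least one run")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


def meets_determinism_bar(outputs, required_rate):
    """True if repeated runs agree at least as often as the layer requires."""
    return consistency_rate(outputs) >= required_rate


# Hypothetical minimum consistency per layer: lenient for Layer 1,
# strict for Layers 4 and 5 (illustrative values only).
REQUIRED_RATE = {1: 0.5, 4: 1.0, 5: 1.0}

# Made-up outputs from five repeated runs of two imaginary tasks.
email_drafts = ["Hi team,", "Hello team,", "Hi team,", "Hi team,", "Hi all,"]
report_totals = ["1048.50", "1048.50", "1048.50", "1048.50", "1048.05"]

print(meets_determinism_bar(email_drafts, REQUIRED_RATE[1]))
print(meets_determinism_bar(report_totals, REQUIRED_RATE[4]))
```

With these sample values the email task passes its lenient bar despite varied wording, while the reporting task fails because one run in five produced a different total. The reporting task is actually the more consistent of the two, which is the point: the same level of variability can be acceptable at Layer 1 and disqualifying at Layer 4.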

Operationalizing the Framework: A Path Forward

This framework can be operationalized via:

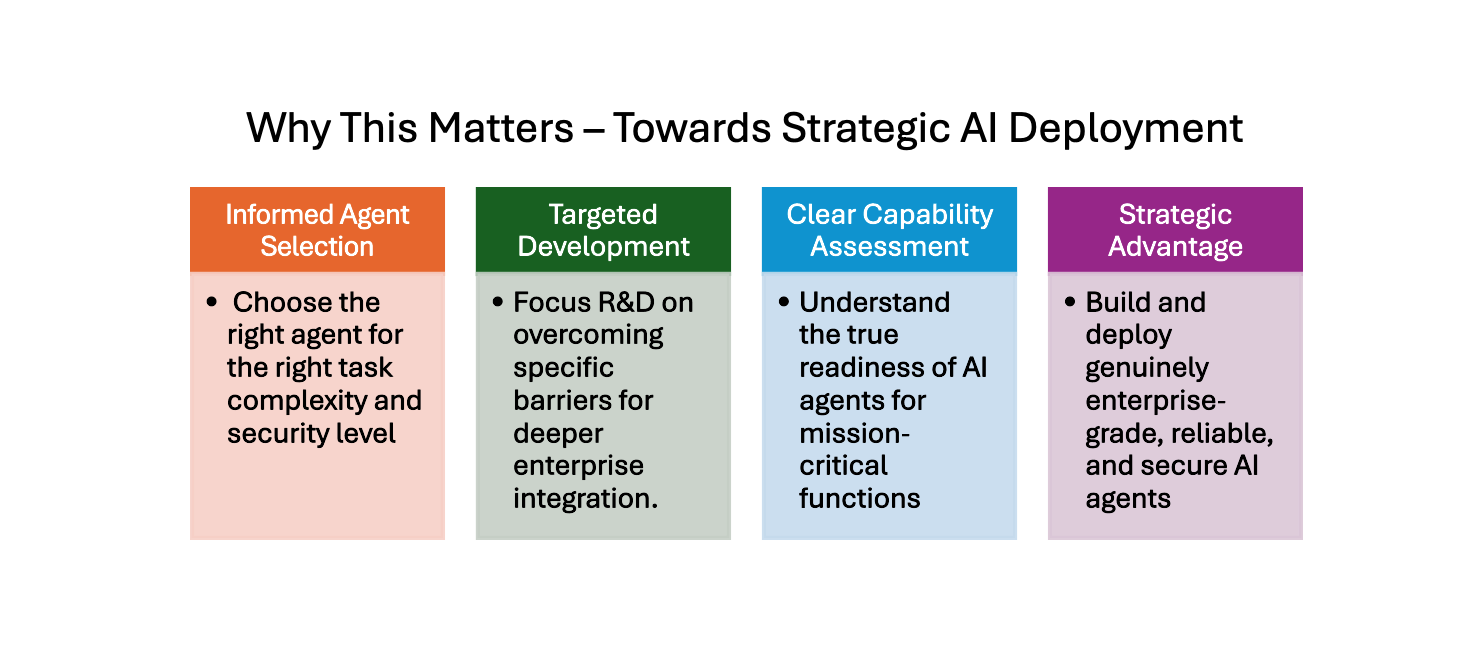

Why This Matters: Towards Strategic AI Deployment

Adopting a structured, layered benchmarking approach, potentially enabled by a dedicated sandbox, offers significant advantages.

By moving beyond generic evaluations and embracing a framework that reflects enterprise realities, we can unlock the true potential of AI agents, driving meaningful automation and innovation. This structured approach is crucial for building trust and ensuring the effective, secure, and scalable deployment of AI in the enterprise.
