The Anatomy of Agents

The concept of a software agent can be traced back to the model that Hewitt et al. [1] proposed: a self-contained, interactive, concurrently executing object called an ‘actor.’ This object had encapsulated internal state and could respond to messages from other similar objects. By Hewitt’s definition, an actor “is a computational agent which has a mail address and a behaviour. Actors communicate by message-passing and carry out their actions concurrently.”
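To make the actor idea concrete, here is a minimal Python sketch, not Hewitt's formalism. It assumes a thread per actor and a queue as the "mail address"; the names `Actor`, `Echo`, `send`, and `behaviour` are illustrative choices rather than part of any existing library.

```python
import queue
import threading


class Actor:
    """A toy actor: a mailbox (its "mail address") plus a behaviour."""

    def __init__(self, name):
        self.name = name
        self.mailbox = queue.Queue()
        self.received = []  # encapsulated internal state
        # Each actor runs concurrently on its own thread.
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def send(self, message):
        """Asynchronously deliver a message to this actor's mailbox."""
        self.mailbox.put(message)

    def behaviour(self, message):
        """How the actor reacts to one message; subclasses override this."""
        self.received.append(message)

    def _run(self):
        while True:
            message = self.mailbox.get()
            if message is None:  # sentinel used by stop()
                break
            self.behaviour(message)

    def stop(self):
        """Process everything already queued, then shut the actor down."""
        self.mailbox.put(None)
        self._thread.join()


class Echo(Actor):
    """An actor whose behaviour is to forward a note to another actor."""

    def __init__(self, name, reply_to):
        self.reply_to = reply_to  # set before the thread starts in Actor.__init__
        super().__init__(name)

    def behaviour(self, message):
        self.reply_to.send(f"{self.name} got: {message}")
```

Actors never touch each other's state directly; the only way `Echo` influences another actor is by calling its `send` method, which mirrors the message-passing discipline in the definition above.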

The notion of agency (the idea that an object can go and carry out an action concurrently with other similar objects) eventually evolved into the concept of intelligent agents that used AI to perform some complex decision-making. However, these agents were still less capable than today’s agents, as they made tactical decisions at various points in workflows without keeping a larger goal in mind. Most recently, we’ve seen the advent of autonomous agents that maintain a larger goal, can sense their environments, and can react to the state of that environment in order to progress toward their goal.

One of the earliest definitions of an autonomous agent, given by Franklin and Graesser [2], is: an autonomous agent is a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to affect what it senses in the future.
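The definition above can be read as a loop: sense, act, and let the action change what is sensed next. The sketch below illustrates that loop with a toy thermostat. The `Room`, `ThermostatAgent`, and `run` names are hypothetical and chosen only for this example.

```python
class Room:
    """Toy environment: a single temperature the agent can sense and change."""

    def __init__(self, temperature):
        self.temperature = temperature


class ThermostatAgent:
    """An agent whose agenda is to bring the room to a target temperature."""

    def __init__(self, target):
        self.target = target  # the agent's own agenda

    def sense(self, room):
        return room.temperature

    def act(self, room, reading):
        # Acting changes the environment, and so changes future readings.
        if reading < self.target:
            room.temperature += 1
        elif reading > self.target:
            room.temperature -= 1


def run(agent, room, steps):
    """Run the sense-act loop and return the sequence of readings."""
    history = []
    for _ in range(steps):
        reading = agent.sense(room)
        history.append(reading)
        agent.act(room, reading)
    return history


print(run(ThermostatAgent(target=20), Room(17), steps=5))  # [17, 18, 19, 20, 20]
```

The readings converge on the target because each action alters the state that the next `sense` call observes, which is the "affect what it senses in the future" part of the definition.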

Defining Characteristics

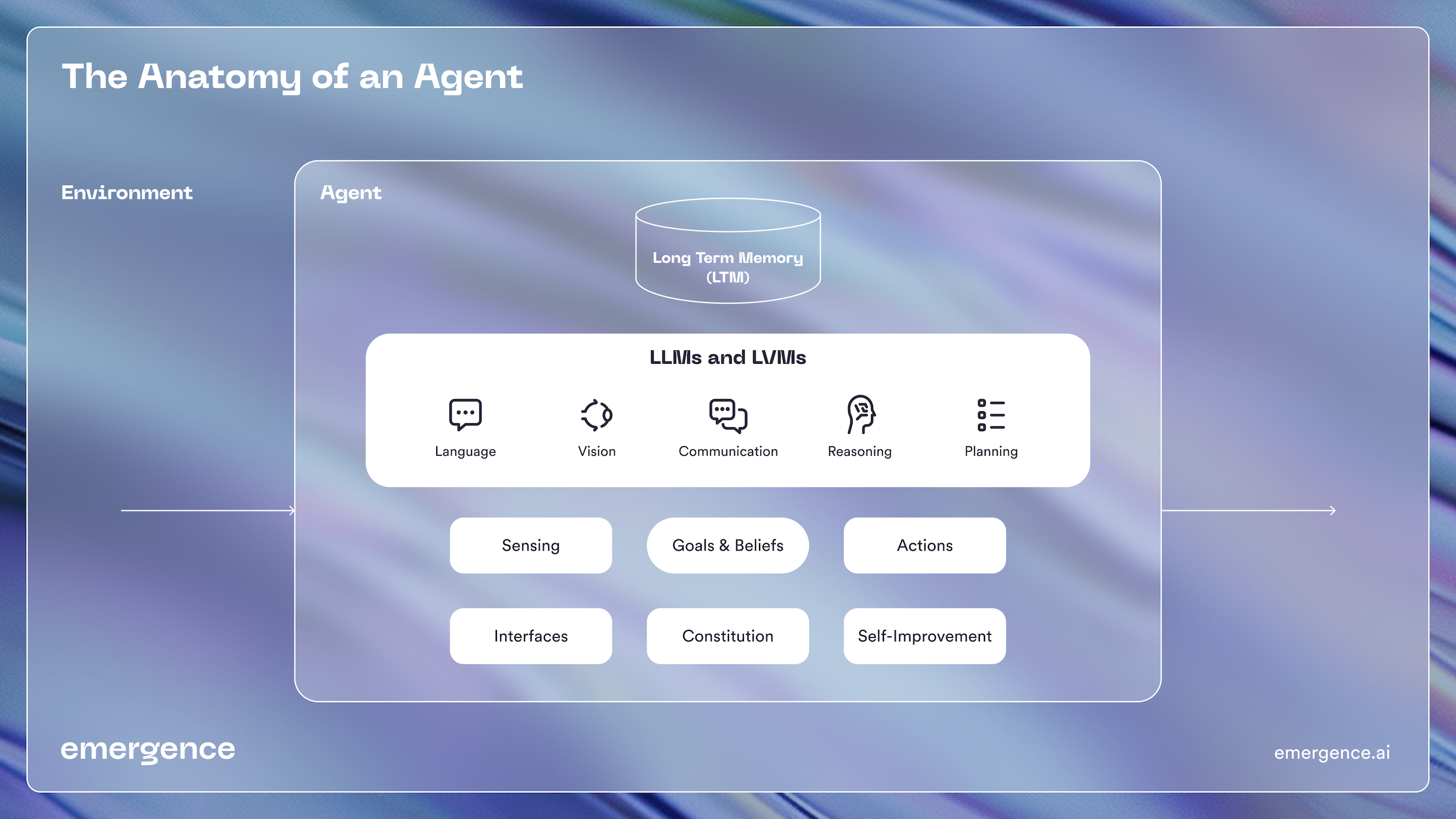

Agents have been defined in various ways and have been a point of discussion for some time now, especially after the introduction of large language models (LLMs) [3, 4, 5] and large vision models (LVMs) [6]. It is useful to have one encompassing definition that is extensible as well as expressive, building on commonly understood concepts from the past. For Emergence’s definition of an agent, we refer back to a few basic principles that embody an agent, and we use them to develop the notion of an “agent object” in the world of LLMs and LVMs. We’ve distilled the following primary concepts that an agent object needs to embody so that agents can be programmed scalably to build robust systems.

- Autonomy: An agent is an autonomous, interactive, goal-driven entity with its own state, behavior, and decision-making capabilities. It has the capability of self-improvement when it sees that it is unable to meet the performance parameters for reaching its goal.

- Reactivity and Proactivity: Agents can be both reactive, meaning they can sense their environment and respond to changes by taking actions, and be proactive, meaning they can take initiative based on their goals. Agents can take actions that change the state of their environment, which can ensure their progress towards their goal.

- Beliefs, Desires, and Intentions (BDI): A common model used in agent-oriented programming (AOP) is the BDI model, where agents are characterized by their beliefs (information about the world), desires (goals or objectives), and intentions (plans of action).

- Social Ability & Communication: Agents have a communication mechanism and can interact with other agents or entities in their environment. This interaction can be highly complex, as it may involve negotiation, coordination, and cooperation. We believe that with the advent of LLMs, this communication can be completely based on natural language, which is both human- and machine-understandable.

- Constitution: An agent needs to adhere to some regulations and policies depending on the imperatives of its task and goals. It needs to protect itself from being compromised or destroyed as well as be trusted not to harm other agents sharing the environment in which it is operating.

- Memory: An agent needs to have long-term memory (LTM) of its past interactions and of the means by which it successfully completed past tasks. LTM can greatly reduce the amount of computing that an agent has to perform in order to complete a new task by referencing relevant past plans and actions. The memory is also the place where an agent can store human demonstrations it has seen, which can expedite its progress without as rigorous a planning and reasoning loop. An agent also has short-term memory, which is typically its current context, represented by prompts and any information that fits within its context length.
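As a rough illustration of how the characteristics above might sit together in one “agent object,” here is a small Python sketch. It is an assumption-laden toy, not Emergence’s framework: the `Agent` class, its field names, the `planner` callback, and the use of a set of forbidden action names as a stand-in for a constitution are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    desires: list                                         # goals or objectives
    beliefs: dict = field(default_factory=dict)           # information about the world
    intentions: list = field(default_factory=list)        # current plan of action
    long_term_memory: dict = field(default_factory=dict)  # goal -> plan that worked
    short_term_memory: list = field(default_factory=list) # current context
    forbidden_actions: frozenset = frozenset()            # toy "constitution"
    inbox: list = field(default_factory=list)             # messages from other agents

    def perceive(self, observation):
        """Reactivity: fold a new observation into beliefs and context."""
        self.beliefs.update(observation)
        self.short_term_memory.append(observation)

    def deliberate(self, goal, planner):
        """Proactivity: reuse a remembered plan if one exists, else plan afresh."""
        if goal in self.long_term_memory:
            plan = list(self.long_term_memory[goal])
        else:
            plan = planner(goal, self.beliefs)
        # Constitution: drop any step the agent is not allowed to take.
        self.intentions = [step for step in plan if step not in self.forbidden_actions]
        return self.intentions

    def remember_success(self, goal):
        """Store the current plan in long-term memory for later reuse."""
        self.long_term_memory[goal] = list(self.intentions)

    def tell(self, other, text):
        """Social ability: send a natural-language message to another agent."""
        other.inbox.append((self.name, text))
```

In this sketch, a second call to `deliberate` for a goal that has already succeeded skips the planner entirely, which is the compute saving that the Memory bullet describes. A real system would replace `planner` with an LLM-backed reasoning loop and the forbidden-action set with a far richer policy check.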

Agent-Oriented Programming (revisited for the LLM era)

Programming paradigms such as object-oriented programming (OOP) evolved from the need to design and develop code that was highly reusable, maintainable, and scalable. Simula, which came out in the 60s, is generally thought to be the first OOP language. However, it was not until the 80s that the use of OOP concepts to develop large-scale enterprise-class software became mainstream, with the introduction of C++ (80s) and later Java (90s). Even though COBOL and FORTRAN were extremely popular in the 70s for enterprise software, their key strengths were as means of accounting (COBOL) and scientific programming (FORTRAN), not as general-purpose OOP languages. The true power and deployment of OOP software in enterprise came to the fore with the advent of Java and Java Beans.

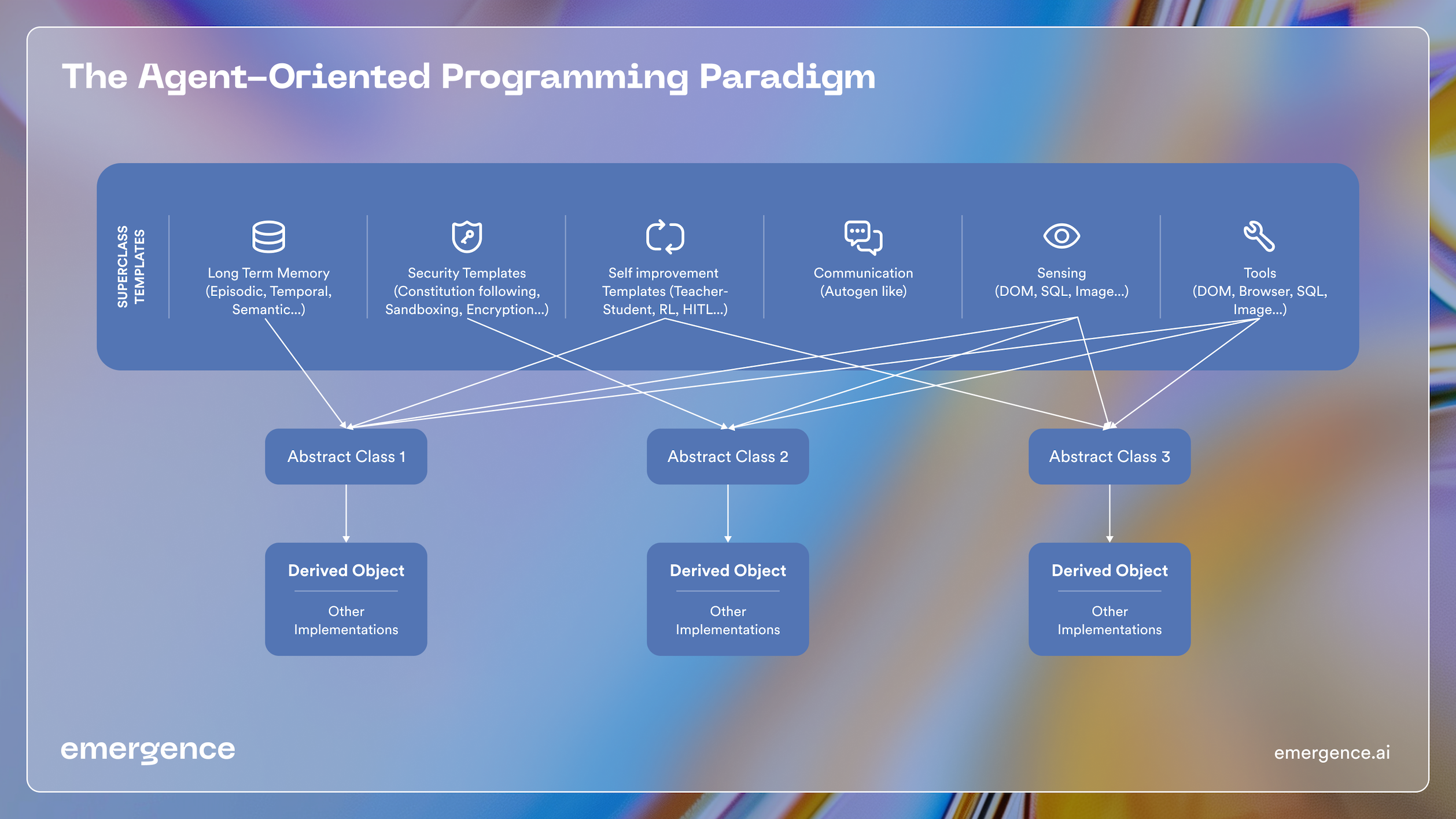

Similarly, we are on the cusp of another evolution in programming paradigms with LLM/LVM-driven systems that have the potential to completely change enterprise workflows. Until recently, the community has mostly been running advanced experiments with LLM/LVM-driven systems. Now, with the validation of enterprise-scale possibilities, we need to extend the current agent-oriented programming (AOP) paradigms to include the power and capabilities of LLM/LVMs. These include capabilities as discussed above, such as advanced language understanding, communication with humans and other agents, self-improvement, various methods of “reasoning” (CoT, ToT, ReAct, etc.), personalization and understanding of context based on LTM, and sensing, interpreting, and acting on environments through the use of tools. Several programming languages and frameworks have been developed specifically for AOP, such as AgentSpeak, JACK, and JADE. These frameworks provide structures and paradigms for creating agent-based systems, but they do not capture the new concepts of AOP made possible by LLM/LVMs. AOP is particularly useful in complex systems where individual components need to operate independently and interact in sophisticated ways, such as in multi-agent systems.

To enable efficient programming of agent-based systems, some of the base characteristics of an agent need to be available as superclass templates. Developers can derive from these and reuse concepts rapidly as well as maintain a semblance of uniformity in how agents are defined and programmed. AutoGen [5] is an example of an agent communication and collaboration paradigm that has made it extremely easy to build multi-agent collaboration systems. However, many more base characteristics of agents need to be standardized, and frameworks need to be built, to enable large-scale agent-oriented programming paradigms. A few of these are shown in the figure below. For example, security templates are a must-have to ensure that agents are born with alignment to some basic constitution and rules of the game. Similarly, self-improvement is inherent to an agent since it needs to continuously improve itself on its task. However, self-improvement is connected to changing the capabilities of an agent, and we need to ensure that whatever tools, child agents, or code an agent creates to self-improve adhere to some properties of goodness and to the agent’s constitution. Therefore, alignment is very closely connected to self-improvement.
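One way to picture a superclass template that ties self-improvement to the constitution is sketched below. This is a hypothetical design, not an API from AutoGen or any other framework: `BaseAgent`, `ConstitutionViolation`, `banned_tools`, and `add_tool` are names invented for the example, and a set of banned tool names is only a placeholder for a real alignment check.

```python
from abc import ABC, abstractmethod


class ConstitutionViolation(Exception):
    """Raised when an agent tries to acquire a capability its rules forbid."""


class BaseAgent(ABC):
    """Hypothetical superclass template that every agent derives from."""

    # Tool names ruled out by the constitution; subclasses may add more.
    banned_tools = frozenset({"disable_logging"})

    def __init__(self, name):
        self.name = name
        self.tools = {}

    def add_tool(self, tool_name, fn):
        """Self-improvement hook: each new capability is checked before use."""
        if tool_name in self.banned_tools:
            raise ConstitutionViolation(f"{self.name} may not add {tool_name!r}")
        self.tools[tool_name] = fn

    def use(self, tool_name, *args):
        return self.tools[tool_name](*args)

    @abstractmethod
    def step(self, observation):
        """Decide what to do with one observation."""


class SummarizerAgent(BaseAgent):
    """A derived agent that inherits the checks and tightens them."""

    banned_tools = BaseAgent.banned_tools | {"send_email"}

    def step(self, observation):
        # The agent gives itself a new tool the first time it needs one.
        if "shorten" not in self.tools:
            self.add_tool("shorten", lambda text: text[:10])
        return self.use("shorten", observation)
```

Because `add_tool` lives in the base class, every derived agent passes through the same gate whenever it extends its own capabilities. That is the sense in which alignment and self-improvement are coupled in the paragraph above.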

In conclusion, developing the right abstractions and templates for agent-oriented programming is important to ensure that we can build systems that scale, whose components are reusable, and that are interoperable and safe to operate. We believe that building this framework is going to be an extremely complex endeavor and that the best way to build it is in the open source community. We will be doing our part by subsequently releasing pieces of our larger framework into the community, in the hope of rallying like-minded developers around the project. In our next blog post, we delve deeper into self-improvement, a characteristic fundamental to any agent, and its potential tradeoff with goals for alignment.

References

1. Hewitt, C. (1977). “Viewing Control Structures as Patterns of Passing Messages.” Artificial Intelligence, 8(3), 323-364.

2. Franklin, S. and Graesser, A. (1997). “Is It an Agent, or Just a Program? A Taxonomy for Autonomous Agents.” In: Müller, J.P., Wooldridge, M.J. and Jennings, N.R., Eds., Intelligent Agents III: Agent Theories, Architectures, and Languages, Springer, Berlin Heidelberg, 21-35.

3. Shen, Y., Song, K., Tan, X., Li, D., Lu, W., & Zhuang, Y. (2024). “HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face.” Advances in Neural Information Processing Systems, 36.

4. Task-driven Autonomous Agent Utilizing GPT-4, Pinecone, and LangChain for Diverse Applications

5. Wu, Q., Bansal, G., Zhang, J., Wu, Y., Zhang, S., Zhu, E., … & Wang, C. (2023). “AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation Framework.” arXiv preprint arXiv:2308.08155.

6. https://arxiv.org/pdf/2403.10517.pdf
